Analyzing physiological biodata is often cumbersome, rarely offers a fast turnaround, and does not allow for collaborative annotation. In this blog post, I would like to present a lightweight collaborative web application that enables games user researchers, designers, and analysts to easily make use of biosignals as metrics for the evaluation of video games.

Over the last few years, an increasing number of studies in the field of games user research (GUR) have addressed the use of biometrics as a real-time measure to quantify aspects of gameplay experience (e.g., emotional valence and arousal). However, only a few studies explore ways to visualize biometrics so that they yield meaningful and intuitively accessible insights for games user researchers in a lightweight and cost-efficient manner. In an effort to fill this gap, we developed a novel web-based GUR tool for the collaborative analysis of gameplay videos based on biosignal time series data. It aims to facilitate video game evaluation procedures in both large- and small-scale settings.

In the video games industry, a rapidly growing and highly competitive market, a polished gameplay experience is one of the key factors for the economic success of a game title. Therefore, the evaluation of playability and gameplay experience, as a way to inform game designers and developers about the strengths and weaknesses of a game prototype, has become an integral part of the development cycle of many video game productions [8, 11]. Biometrics have been shown to support such evaluation procedures by making it easier to identify emotionally significant gameplay situations. However, available tools for the analysis of biometric player data are generally not easily accessible to small-scale development teams. Our web-based tool is meant to make the collaborative interpretation of electrophysiological biosignals available to teams of any size.

The usefulness of biosignals as real-time indicators of emotional valence and arousal in video games has been demonstrated by both industrial [1, 2] and academic research [4, 7]. Psychophysiological measurement techniques have been shown to complement conventional qualitative evaluation techniques, such as post-hoc questionnaires and player interviews, by providing an unobtrusive and sensitive way to quantify emotion-relevant player data in real time [5]. Despite a growing body of literature about the statistical interpretation of psychophysiological signals [4, 7, 10] and the visualization of in-game event logs [3, 9], the visualization of biometric player data is still an under-represented topic in games user research. Only a few visualization approaches harness the potential of psychophysiological player data to gain insights about emotional components of gameplay experience. One of the few studies addressing this research gap puts forward the Biometric Storyboard approach [6], in which biometric and behavioral player data are combined with gameplay videos and player annotations to form a graph-like visualization of gameplay experience.

The tool I would like to present in this blog post is a collaborative web application that lets multiple users analyze and annotate gameplay videos efficiently on the basis of biometric player data. A user and access rights management system allows several annotators to work on the same gameplay video, guided by the player-specific biometric time series data recorded during that gameplay session. Easy-to-use drag-and-drop interfaces allow users to upload video files and biosignal data for a given testing session. These inputs are then processed automatically and visualized as an interactive annotation interface inspired by the Biometric Storyboard approach.

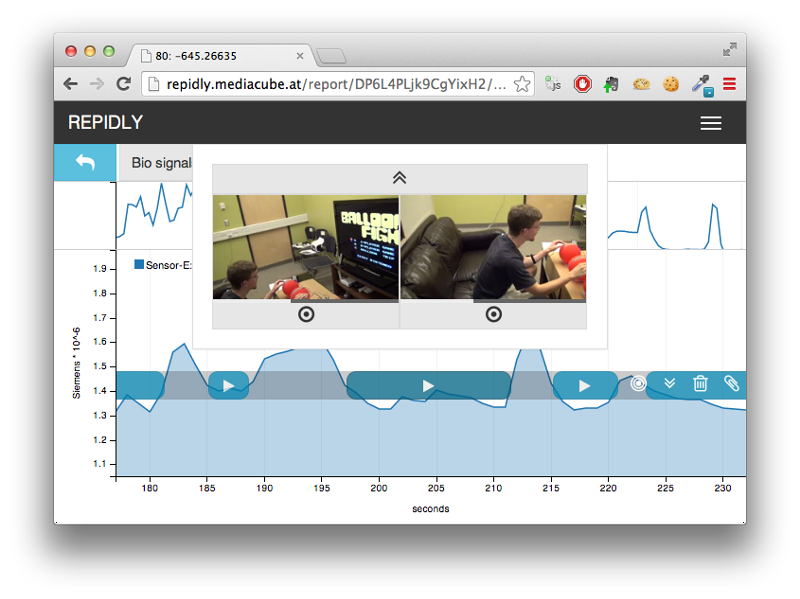

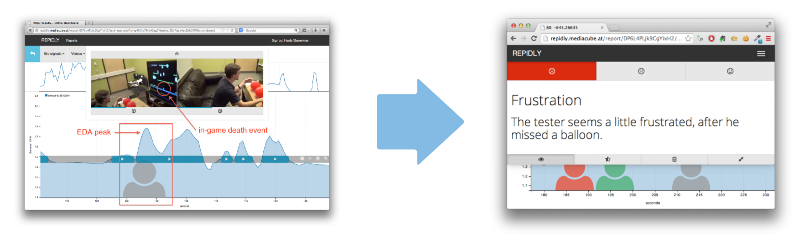

One of the tool's core functions is that users can apply custom thresholds to the uploaded biosignal channels in order to efficiently identify gameplay situations with a potentially high emotional impact on the player. To do so, users drag a threshold bar onto the currently active biosignal time series graph. The time segments extracted by the thresholding mechanism are visualized in place as play buttons. Clicking a button plays back the corresponding excerpt of the gameplay video, which helps the analyst decide whether the situation is relevant for the evaluation of the testing session.

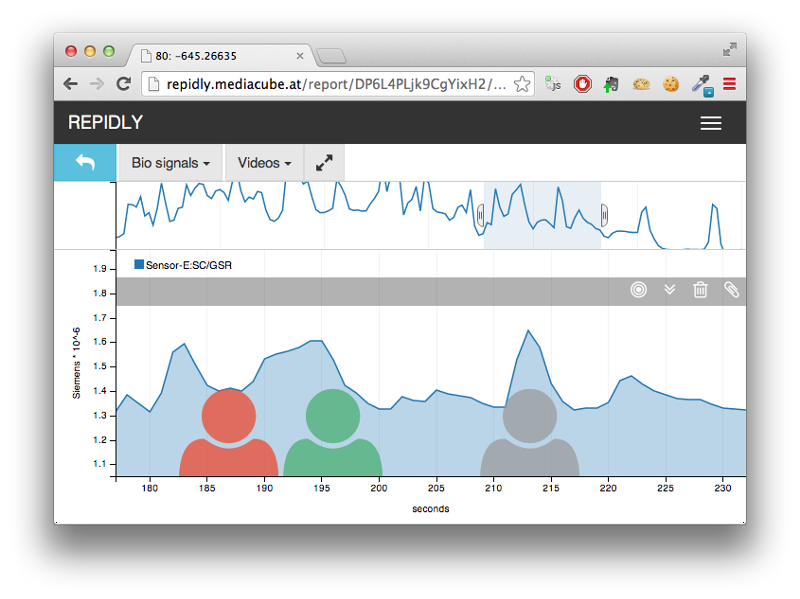
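To make the thresholding step more concrete, here is a minimal Python sketch of how time segments could be extracted from one biosignal channel. It is not the tool's actual implementation: the names `Segment` and `segments_above_threshold`, the `min_duration` parameter, and the assumption that the signal arrives as parallel lists of timestamps (in seconds) and sample values are all hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Segment:
    """A time interval (in seconds) during which the signal exceeds the threshold."""
    start: float
    end: float


def segments_above_threshold(
    times: Sequence[float],
    values: Sequence[float],
    threshold: float,
    min_duration: float = 0.0,
) -> List[Segment]:
    """Return contiguous runs of samples whose value is strictly above `threshold`.

    Hypothetical helper: runs shorter than `min_duration` seconds are dropped.
    """
    if len(times) != len(values):
        raise ValueError("times and values must have the same length")

    segments: List[Segment] = []
    start: Optional[float] = None  # timestamp of the first sample in the current run
    last: Optional[float] = None   # timestamp of the latest sample in the current run

    for t, v in zip(times, values):
        if v > threshold:
            if start is None:
                start = t
            last = t
        else:
            # The run (if any) ended at the previous sample.
            if start is not None and last - start >= min_duration:
                segments.append(Segment(start, last))
            start = None

    # Close a run that extends to the end of the recording.
    if start is not None and last - start >= min_duration:
        segments.append(Segment(start, last))
    return segments


# Made-up example data: one sample per second of a normalized signal.
times = [0, 1, 2, 3, 4, 5, 6]
values = [0.1, 0.8, 0.9, 0.2, 0.7, 0.75, 0.1]
for seg in segments_above_threshold(times, values, threshold=0.5):
    print(f"play video from {seg.start}s to {seg.end}s")
```

Each returned segment corresponds to one play button in the interface: its start and end times would determine which excerpt of the gameplay video is played back.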

After watching the video excerpt, the user decides whether to add an annotation to the time series graph. An annotation is added by clicking on the time series graph and is visualized as a player symbol at the selected time point in the biosignal recording. Annotations may be further specified by selecting the player’s sentiment (positive or negative), as inferred by the analyst, and by adding a comment.
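An annotation as described above could be represented by a small record type. The following Python sketch is an assumption about one possible data model, not the tool's real schema: the `Annotation` and `Sentiment` names, the `author` field (added here because the tool supports multiple annotators), and the `annotations_in_range` helper are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Optional


class Sentiment(Enum):
    """Player sentiment as inferred by the analyst."""
    POSITIVE = "positive"
    NEGATIVE = "negative"


@dataclass
class Annotation:
    time_s: float                          # selected time point in the recording, in seconds
    sentiment: Optional[Sentiment] = None  # optional, since sentiment is a further specification
    comment: str = ""                      # optional free-text comment
    author: str = ""                       # hypothetical field identifying the annotator


def annotations_in_range(
    annotations: Iterable[Annotation], start: float, end: float
) -> List[Annotation]:
    """Return the annotations whose time point lies in [start, end], ordered by time."""
    hits = [a for a in annotations if start <= a.time_s <= end]
    return sorted(hits, key=lambda a: a.time_s)


# Made-up example: two analysts annotating the same session.
notes = [
    Annotation(42.0, Sentiment.NEGATIVE, "Player misses the jump repeatedly", "analyst_a"),
    Annotation(12.5, Sentiment.POSITIVE, "First boss defeated", "analyst_b"),
    Annotation(95.0),
]
for note in annotations_in_range(notes, 0, 60):
    label = note.sentiment.value if note.sentiment else "unspecified"
    print(f"{note.time_s}s [{label}] {note.comment}")
```

Keeping the sentiment and comment optional mirrors the workflow in which an analyst first places an annotation and only then refines it.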

In this post, I presented a new tool that supports the collaborative and efficient analysis of gameplay videos on the basis of biometric player data. Its applicability is not limited to games user research. The tool may also be applied to usability and user experience analysis more generally, in settings where biosignals help to efficiently identify relevant intervals in long testing sessions for interactive systems. Because the web application enables remote collaboration and supports various platforms, it caters to both large- and small-scale development teams.

References

[1] Mike Ambinder. 2011. Biofeedback in Gameplay: How Valve Measures Physiology to Enhance Gaming Experience. Presentation at GDC 2011. http://www.gdcvault.com/play/1014734/Biofeedback-in-Gameplay-How-Valve

[2] Pierre Chalfoun. 2013. Biometrics In Games For Actionable Results. Presentation at MIGS 2013.

[3] Magy Seif El-Nasr, Anders Drachen, and Alessandro Canossa. 2013. Game Analytics: Maximizing the Value of Player Data. Springer Publishing Company, Incorporated.

[4] J. Matias Kivikangas, Inger Ekman, Guillaume Chanel, Simo Järvelä, Ben Cowley, Pentti Henttonen, and Niklas Ravaja. 2010. Review on psychophysiological methods in game research. In Proc. of 1st Nordic DiGRA, DiGRA.

[5] P. Mirza-Babaei, Sebastian Long, Emma Foley, and G. McAllister. 2011. Understanding the contribution of biometrics to games user research. Proc. DIGRA (2011), 1–13. http://citeseerx.ist.psu.edu/viewdoc/download?rep=rep1&type=pdf&doi=10.1.1.224.9755

[6] P. Mirza-Babaei and L. E. Nacke. 2013. How does it play better?: exploring user testing and biometric storyboards in games user research. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (May 2013). http://dl.acm.org/citation.cfm?id=2466200

[7] Lennart E. Nacke, Mark N. Grimshaw, and Craig A. Lindley. 2010. More than a feeling: Measurement of sonic user experience and psychophysiology in a first-person shooter game. Interact. Comput. 22, 5 (Sep. 2010), 336–343. DOI: http://dx.doi.org/10.1016/j.intcom.2010.04.005

[8] Randy J. Pagulayan, Kevin Keeker, Dennis Wixon, Ramon L. Romero, and Thomas Fuller. 2003. User-centered Design in Games. In The Human-computer Interaction Handbook, Julie A Jacko and Andrew Sears (Eds.). L. Erlbaum Associates Inc., Hillsdale, NJ, USA, 883–906. http://dl.acm.org/citation.cfm?id=772072.772128

[9] Günter Wallner. 2013. Play-Graph: A Methodology and Visualization Approach for the Analysis of Gameplay Data. In 8th International Conference on the Foundations of Digital Games (FDG 2013). 253–260.

[10] Rina R. Wehbe, Dennis L. Kappen, David Rojas, Matthias Klauser, Bill Kapralos, and Lennart E. Nacke. 2013. EEG-based Assessment of Video and In-game Learning. In CHI ’13 Extended Abstracts on Human Factors in Computing Systems (CHI EA ’13). ACM, New York, NY, USA, 667–672. DOI: http://dx.doi.org/10.1145/2468356.2468474

[11] Veronica Zammitto. 2011. The Science of Play Testing: EA’s Methods for User Research. Presentation at GDC 2011. http://www.gdcvault.com/play/1014552/The-Science-of-Play-Testing